VTB recently replaced some of the hardware and software components of its document workflow system (SDO). The changes were too significant to put into operation without full-scale load testing: any failure in the workflow system could lead to huge losses.

Intertrust specialists tested VTB SDO on Huawei equipment: a server farm, a data network and storage based on solid-state drives. For the tests, we built an environment that reproduced real scenarios at the maximum possible load. The results and conclusions are below.

Why a bank needs a workflow system and why it should be tested

The SDO at VTB is a complex software package on which all key management processes depend. The system provides general document services, electronic interaction and analytics. Well-organized document circulation speeds up management decisions, makes the way they are adopted transparent and controllable, improves the quality of management and strengthens the bank's competitiveness.

The SDO must ensure that decisions are carried out precisely and in line with the adopted regulations. This requires high performance, fault tolerance and flexible scaling. The system also faces strict requirements for access control, the volume of documents processed simultaneously and the number of users. VTB SDO currently has 65 thousand users, and the number keeps growing.

The system is constantly evolving: the architecture changes, and outdated technologies are replaced by modern ones. Recently, some of the system components were replaced with import-independent ones that contain no proprietary software. Will the new SDO architecture, based on CompanyMedia software and the Huawei hardware complex, cope with the increased load? Only stress testing could answer this question unambiguously without waiting for the same situation to arise in production.

In addition to checking how the new software holds up under stress, we had the following tasks:

- determine the exact parameters of horizontal and vertical sizing of servers for the bank load profile;

- check components for fault tolerance under high load conditions;

- identify the entropy coefficient of inter-cluster interaction under horizontal scaling;

- try scaling read requests when using the platform balancer;

- determine the coefficient of horizontal scaling of all nodes and components of the system;

- determine the maximum possible hardware parameters of servers for various functional purposes (vertical scaling);

- study the load profile that the application places on the technical infrastructure and extrapolate the results to plan the development of the information system;

- investigate how consolidating application data on a single storage system affects resource optimization, reliability and performance.

Methodology and equipment

Load testing of electronic document management systems is often carried out with simplified scenarios. They simulate quickly finding and opening document cards that are not linked to other files and have no life cycle history. As a rule, such tests ignore access rights and other resource-intensive factors typical of real conditions.

Tests this far removed from reality are often run by solution vendors. That is understandable: a vendor wants to show a potential client high performance and fast response. Unsurprisingly, simplified test models show record response times even when the number of users and documents grows significantly.

We needed to reproduce the actual operating conditions. So we started by collecting statistics for a month: we recorded user activity and observed the background work of all services. The monitoring systems integrated into the SDO were a great help here. Bank employees helped us correct the resulting data on document flows, and we adjusted it for the projected growth in those flows.

The result was a testing methodology that made it possible to simulate the processes taking place in the system under realistic load. On the test bench, we reproduced the most common business operations and the most time-consuming requests, individually and in various combinations. During performance testing, all components were put under stress. The operations included calculating user access rights to system objects, opening documents with a complex branched hierarchy and a large number of links, searching the system, and so on.

Load Testing Profile:

- registered users: 65 thousand with an increase of up to 150 thousand;

- frequency of user logins (authentications): 50 thousand per hour;

- users simultaneously working in the system: 10 thousand;

- registered documents: 10 million per year;

- volume of file attachments: 1 TB per year;

- document approval processes: 1.5 million per year;

- sign-offs (visas) by approvers: 7.5 million per year;

- resolutions and instructions: 15 million per year;

- reports on resolutions and instructions: 15 million per year;

- user tasks: 18 million per year.
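
To get a feel for what this profile means per second, the figures can be converted into average rates. The sketch below is only an illustration: it assumes the yearly volumes are spread evenly over a 365-day year, which the article does not claim, so real peaks during business hours will be noticeably higher. The class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Converts load-profile volumes into average per-second rates.
// Assumption (not from the article): load is spread evenly over a 365-day year.
class LoadProfileRates {
    static final double SECONDS_PER_YEAR = 365.0 * 24 * 60 * 60;

    static double perSecondFromYearly(double perYear) {
        return perYear / SECONDS_PER_YEAR;
    }

    static double perSecondFromHourly(double perHour) {
        return perHour / 3600.0;
    }

    public static void main(String[] args) {
        // Yearly counts taken from the load testing profile above.
        Map<String, Double> yearly = new LinkedHashMap<>();
        yearly.put("registered documents", 10_000_000.0);
        yearly.put("document approval processes", 1_500_000.0);
        yearly.put("approver sign-offs", 7_500_000.0);
        yearly.put("resolutions and instructions", 15_000_000.0);
        yearly.put("reports on resolutions", 15_000_000.0);
        yearly.put("user tasks", 18_000_000.0);

        for (Map.Entry<String, Double> e : yearly.entrySet()) {
            System.out.printf("%-30s %.3f per second%n",
                    e.getKey(), perSecondFromYearly(e.getValue()));
        }
        // Login frequency is given per hour in the profile.
        System.out.printf("%-30s %.1f per second%n",
                "user logins", perSecondFromHourly(50_000));
    }
}
```

Under this even-load assumption, 50 thousand logins per hour works out to roughly 14 authentications per second on average.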

The application data was consolidated on a single Huawei OceanStor Dorado 6000 V3 storage system with 117 SSDs of 3.6 TB each, giving more than 300 TB of usable capacity. Compute ran on the Huawei E9000 modular server system, and data traveled over a network built on Huawei CE series data center switches. Throughout the test we monitored all system metrics around the clock. All results, including historical data, were recorded as graphs and tables for later analysis.

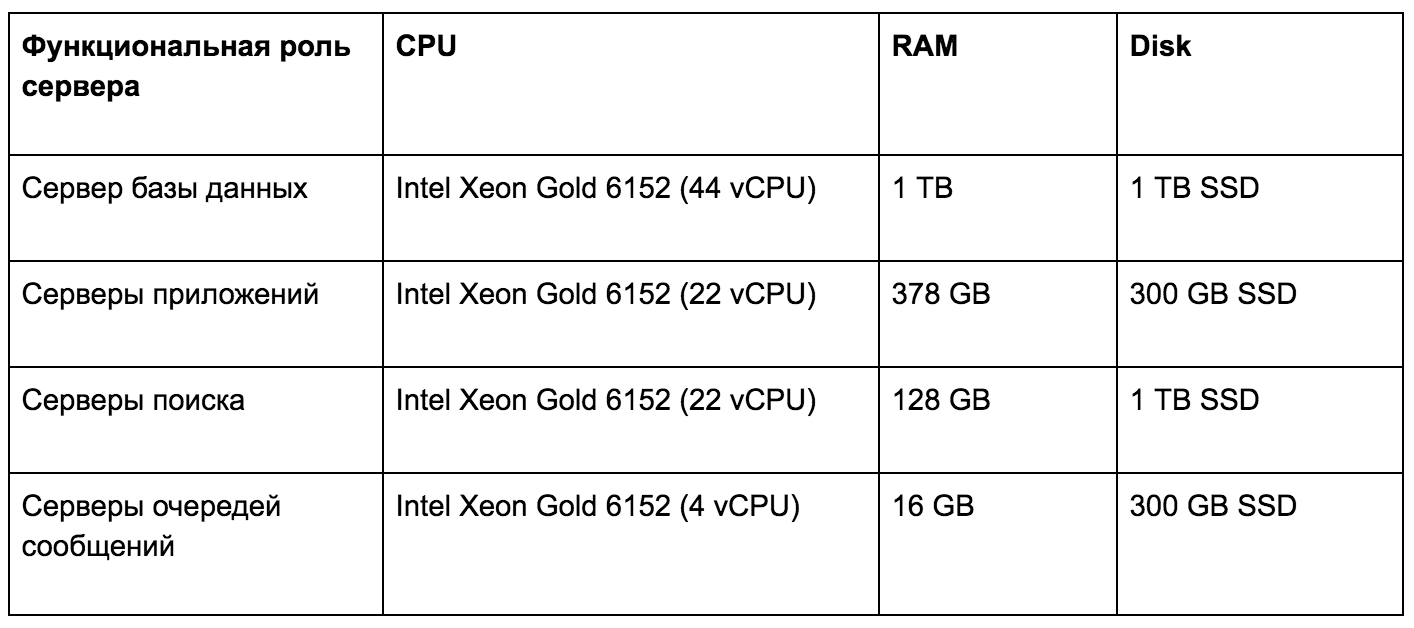

Hardware infrastructure of the load test: servers

Thanks to the high performance of the Huawei OceanStor Dorado 6000 V3 storage system, latency on user requests rarely exceeded 1 ms. A disk subsystem this fast encouraged us to dig further: we decided to analyze the historical data to determine how different types of workload affect the technical infrastructure. The results make it possible to plan the development of the system flexibly and accurately in line with the requirements for the hardware platform.

In terms of scaling, we checked:

- limits of vertical scaling of the application server (CMJ), with resources in order of criticality: number and clock frequency of cores, amount of RAM;

- support for horizontal scaling of the application server (CMJ) by duplicating functionally identical services and balancing between them;

- limits of vertical and horizontal scaling of the client server (Web-GUI);

- limits of vertical scaling of file storage (FS), with critical resources: network bandwidth, disk speed;

- support for horizontal scaling of file storage (FS) through a distributed file system: Ceph, GlusterFS;

- limits of vertical scaling of the database (PostgreSQL), with resources in order of criticality: amount of RAM, disk speed, number and clock frequency of cores;

- support for horizontal scaling of the database (PostgreSQL): scaling the read load across replica (slave) servers, and scaling the write load by partitioning into functional modules;

- limits of vertical and horizontal scaling of the message broker (Apache Artemis);

- limits of vertical and horizontal scaling of the search server (Apache Solr).

Problems and Solutions

One of the main tasks was to identify possible performance problems in the SDO. During the work, we found the following bottlenecks and ways to address them.

Locks in synchronous logging. In the standard WildFly configuration, logging operations are performed synchronously and significantly affect performance. We decided to switch to an asynchronous logger and, at the same time, to write not to disk but to the ELK log aggregation stack.
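
The idea behind asynchronous logging can be sketched in plain Java. This is not the WildFly configuration used in the project, which the article does not show; it is a minimal illustration built on `java.util.logging`, and the class name `AsyncQueueHandler`, the queue capacity and the drop-when-full policy are all assumptions. The request thread only puts a record into a bounded queue, and a background thread passes it on to the real, slow handler.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.logging.ConsoleHandler;
import java.util.logging.Handler;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

// Hypothetical asynchronous wrapper around any java.util.logging Handler.
class AsyncQueueHandler extends Handler {
    private final BlockingQueue<LogRecord> queue;
    private final Handler delegate;
    private final Thread worker;
    private volatile boolean closed;

    AsyncQueueHandler(Handler delegate, int capacity) {
        this.delegate = delegate;
        this.queue = new ArrayBlockingQueue<>(capacity);
        this.worker = new Thread(this::drain, "async-log-writer");
        this.worker.setDaemon(true);
        this.worker.start();
    }

    @Override
    public void publish(LogRecord record) {
        if (closed || !isLoggable(record)) {
            return;
        }
        // Non-blocking: if the queue is full, the record is dropped
        // (an assumed policy) instead of stalling the request thread.
        queue.offer(record);
    }

    // Runs on the background thread and performs the slow write.
    private void drain() {
        try {
            while (!closed || !queue.isEmpty()) {
                LogRecord record = queue.poll(100, TimeUnit.MILLISECONDS);
                if (record != null) {
                    delegate.publish(record);
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    @Override
    public void flush() {
        delegate.flush();
    }

    @Override
    public void close() {
        closed = true;
        try {
            worker.join(2000); // give the worker time to drain the queue
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        delegate.close();
    }

    public static void main(String[] args) {
        AsyncQueueHandler handler = new AsyncQueueHandler(new ConsoleHandler(), 1024);
        Logger log = Logger.getLogger("demo");
        log.setUseParentHandlers(false);
        log.addHandler(handler);
        log.info("request handled");
        handler.close();
    }
}
```

The trade-off in this sketch is deliberate: under overload it loses log records rather than slowing down user requests. A production setup may prefer to block or to spill to disk instead.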

Initialization of unnecessary sessions when working with the data repository. Each thread that received data from the repository initialized a session for SSO authentication twice. This operation is resource-intensive: it greatly increases the execution time of a user request and reduces the overall throughput of the server.

Locks when working with application cache objects. The application used fairly heavy `ReentrantLock` locks (Java 7), which hurt query execution speed. The lock type was changed to `StampedLock`, which significantly reduced the time spent working with cache objects.
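
To illustrate why `StampedLock` can be cheaper for read-heavy cache objects, here is a minimal sketch of its optimistic-read pattern. It is not CompanyMedia code: the class `CachedAccessRights` and its fields are hypothetical. A reader first reads the fields without acquiring any lock, then checks whether a write happened in the meantime, and takes a real read lock only if it did.

```java
import java.util.concurrent.locks.StampedLock;

// Hypothetical cache slot holding two fields that must be read consistently.
class CachedAccessRights {
    private final StampedLock lock = new StampedLock();
    private String userId = "";
    private long rightsMask = 0L;

    // Writers take the exclusive lock, as with any read-write lock.
    void update(String newUserId, long newRightsMask) {
        long stamp = lock.writeLock();
        try {
            userId = newUserId;
            rightsMask = newRightsMask;
        } finally {
            lock.unlockWrite(stamp);
        }
    }

    // Readers try a lock-free optimistic read first.
    String snapshot() {
        long stamp = lock.tryOptimisticRead();
        String u = userId;
        long m = rightsMask;
        if (!lock.validate(stamp)) {
            // A write intervened: fall back to a regular read lock.
            stamp = lock.readLock();
            try {
                u = userId;
                m = rightsMask;
            } finally {
                lock.unlockRead(stamp);
            }
        }
        return u + ":" + m;
    }

    public static void main(String[] args) {
        CachedAccessRights cache = new CachedAccessRights();
        cache.update("alice", 5L);
        System.out.println(cache.snapshot());
    }
}
```

One design caveat: unlike `ReentrantLock`, `StampedLock` is not reentrant, so a thread that already holds the lock must not try to acquire it again.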

After these fixes, we ran the load tests again to measure the average time of typical operations in the SDO with relational storage under the bank's load profile. We got the following results:

- user login to the system - 400 ms;

- viewing execution progress (on average) - 2.5 s;

- creation of a registration and control card - 1.4 s;

- registration of a registration and control card - 600 ms;

- creation of a resolution - 1 s.

Findings

In addition to identifying problems, stress testing confirmed some of our assumptions.

- The system performs much better on Linux operating systems.

- The declared fault-tolerance principles work at the level of each component in "hot" mode.

- The key component, the business logic service that accepts user requests, supports "mirror" horizontal scaling, and its throughput grows almost linearly with the number of instances.

- The optimal sizing of the business logic service for 1200 simultaneous users is 8 vCPU for the service and 1.5 vCPU for the DBMS.

- Consolidating application data on a single storage system significantly increases performance and reliability and improves scalability.
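
As a rough illustration of how the sizing figure can be used, the sketch below extrapolates it to the 10 thousand simultaneous users from the load profile. It assumes perfectly linear scaling of both the service and the DBMS. The article reports only "almost linear" scaling, and only for the business logic service, so treat the result as an estimate rather than a measured value. The class and method names are hypothetical.

```java
// Extrapolates the measured sizing unit (1200 users: 8 vCPU service + 1.5 vCPU DBMS).
// Assumption (not from the article): both components scale perfectly linearly.
class SizingEstimate {
    static final int USERS_PER_UNIT = 1200;
    static final double SERVICE_VCPU_PER_UNIT = 8.0;
    static final double DBMS_VCPU_PER_UNIT = 1.5;

    // Number of sizing units needed, rounded up.
    static int units(int concurrentUsers) {
        return (concurrentUsers + USERS_PER_UNIT - 1) / USERS_PER_UNIT;
    }

    static double serviceVcpu(int concurrentUsers) {
        return units(concurrentUsers) * SERVICE_VCPU_PER_UNIT;
    }

    static double dbmsVcpu(int concurrentUsers) {
        return units(concurrentUsers) * DBMS_VCPU_PER_UNIT;
    }

    public static void main(String[] args) {
        int users = 10_000; // simultaneous users from the load profile
        System.out.printf("units: %d, service vCPU: %.1f, DBMS vCPU: %.1f%n",
                units(users), serviceVcpu(users), dbmsVcpu(users));
    }
}
```

For 10 thousand simultaneous users this gives 9 units, that is 72 vCPU for the business logic service and 13.5 vCPU for the DBMS under the stated assumption.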

We will be happy to answer your questions in the comments, especially if you would like to know more about particular aspects of the testing.