One evening, after coming home from work, I decided to spend some time on a home project. I made several edits and immediately wanted to experiment with them. But before I could experiment, I had to push the changes, log in to the VPS, rebuild the container, and start it. That is when I decided it was time to deal with continuous delivery.

Initially, I was choosing between Circle CI, Travis, and Jenkins. I ruled out Jenkins almost immediately because I had no need for such a powerful tool. After a quick read about Travis, I concluded that it is convenient for building and testing, but it did not seem suited to delivery. I learned about Circle CI from overly intrusive ads on YouTube. I started experimenting with its examples, but at some point I made a mistake and ended up with a test run that never finished, which burned a lot of my precious build minutes (in general the limit is generous enough not to worry about, but it did hit me). Resuming the search, I stumbled upon GitHub Actions. Playing with the Get Started examples left a positive impression, and after a quick look at the documentation I concluded that it is very convenient to store build secrets, build projects, and practically deploy them all in one place. With glowing eyes, I quickly sketched the desired scheme, and the gears started turning.

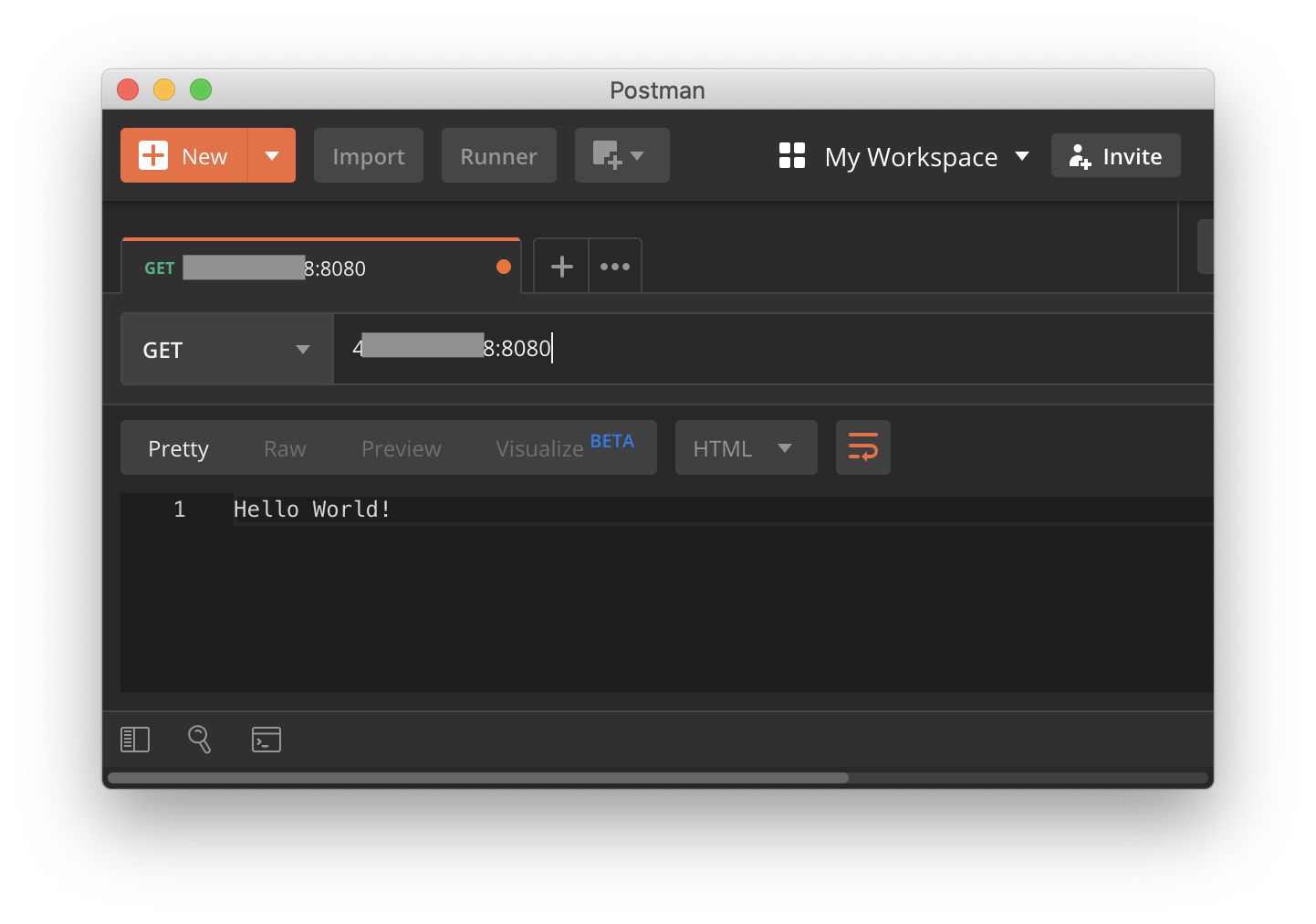

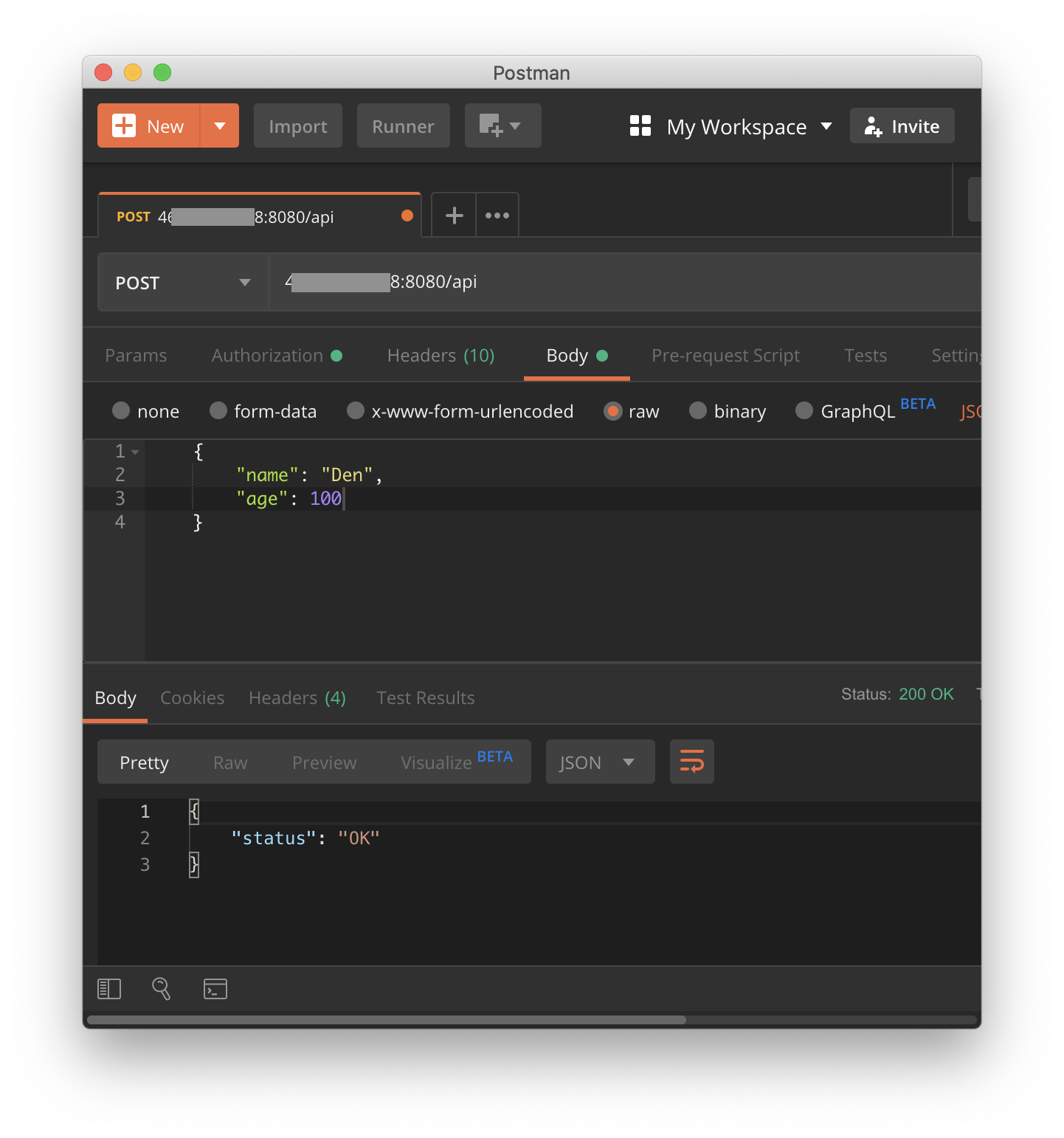

First, let's set up testing. As a test subject, I wrote a simple web server in Flask with 2 endpoints:

Listing: a simple web application

```python
from flask import Flask
from flask import request, jsonify

app = Flask(__name__)


def validate_post_data(data: dict) -> bool:
    if not isinstance(data, dict):
        return False
    if not data.get('name') or not isinstance(data['name'], str):
        return False
    if data.get('age') and not isinstance(data['age'], int):
        return False
    return True


@app.route('/', methods=['GET'])
def hello():
    return 'Hello World!'


@app.route('/api', methods=['GET', 'POST'])
def api():
    """
    /api endpoint
    GET - returns json= {'status': 'test'}
    POST -
        {
            name - str not null
            age - int optional
        }
    :return:
    """
    if request.method == 'GET':
        return jsonify({'status': 'test'})
    elif request.method == 'POST':
        if validate_post_data(request.json):
            return jsonify({'status': 'OK'})
        else:
            return jsonify({'status': 'bad input'}), 400


def main():
    app.run(host='0.0.0.0', port=8080)


if __name__ == '__main__':
    main()
```

And a few tests:

Listing: application tests

```python
import unittest
import app as tested_app
import json


class FlaskAppTests(unittest.TestCase):

    def setUp(self):
        tested_app.app.config['TESTING'] = True
        self.app = tested_app.app.test_client()

    def test_get_hello_endpoint(self):
        r = self.app.get('/')
        self.assertEqual(r.data, b'Hello World!')

    def test_post_hello_endpoint(self):
        r = self.app.post('/')
        self.assertEqual(r.status_code, 405)

    def test_get_api_endpoint(self):
        r = self.app.get('/api')
        self.assertEqual(r.json, {'status': 'test'})

    def test_correct_post_api_endpoint(self):
        r = self.app.post('/api',
                          content_type='application/json',
                          data=json.dumps({'name': 'Den', 'age': 100}))
        self.assertEqual(r.json, {'status': 'OK'})
        self.assertEqual(r.status_code, 200)

        r = self.app.post('/api',
                          content_type='application/json',
                          data=json.dumps({'name': 'Den'}))
        self.assertEqual(r.json, {'status': 'OK'})
        self.assertEqual(r.status_code, 200)

    def test_not_dict_post_api_endpoint(self):
        r = self.app.post('/api',
                          content_type='application/json',
                          data=json.dumps([{'name': 'Den'}]))
        self.assertEqual(r.json, {'status': 'bad input'})
        self.assertEqual(r.status_code, 400)

    def test_no_name_post_api_endpoint(self):
        r = self.app.post('/api',
                          content_type='application/json',
                          data=json.dumps({'age': 100}))
        self.assertEqual(r.json, {'status': 'bad input'})
        self.assertEqual(r.status_code, 400)

    def test_bad_age_post_api_endpoint(self):
        r = self.app.post('/api',
                          content_type='application/json',
                          data=json.dumps({'name': 'Den', 'age': '100'}))
        self.assertEqual(r.json, {'status': 'bad input'})
        self.assertEqual(r.status_code, 400)


if __name__ == '__main__':
    unittest.main()
```

Coverage Output:

```
$ coverage report
Name                 Stmts   Miss  Cover
------------------------------------------------------------------
src/app.py              28      2    93%
src/tests.py            37      0   100%
------------------------------------------------------------------
TOTAL                   65      2    96%
```

Now let's create our first action, which will run the tests. According to the documentation, all workflow files must be stored in a special directory, `.github/workflows`:

```shell
$ mkdir -p .github/workflows
$ touch .github/workflows/test_on_push.yaml
```

I want this action to run on every push to any branch, but not on releases (tags), because those will be tested separately:

```yaml
on:
  push:
    tags:
      - '!refs/tags/*'
    branches:
      - '*'
```

Then we create a job that will run on the latest available version of Ubuntu:

```yaml
jobs:
  run_tests:
    runs-on: [ubuntu-latest]
```

In the steps, we check out the code, install Python, install the dependencies, run the tests, and display the coverage:

```yaml
    steps:
      # Check out the repository
      - uses: actions/checkout@master
      # Install Python
      - uses: actions/setup-python@v1
        with:
          python-version: '3.8'
          architecture: 'x64'
      - name: Install requirements
        # Install the dependencies
        run: pip install -r requirements.txt
      - name: Run tests
        run: coverage run src/tests.py
      - name: Tests report
        run: coverage report
```

All together:

```yaml
name: Run tests on any Push event
# Run on every push to any branch,
# except tags (releases are tested separately)
on:
  push:
    tags:
      - '!refs/tags/*'
    branches:
      - '*'
jobs:
  run_tests:
    runs-on: [ubuntu-latest]
    steps:
      # Check out the repository
      - uses: actions/checkout@master
      # Install Python
      - uses: actions/setup-python@v1
        with:
          python-version: '3.8'
          architecture: 'x64'
      - name: Install requirements
        # Install the dependencies
        run: pip install -r requirements.txt
      - name: Run tests
        run: coverage run src/tests.py
      - name: Tests report
        run: coverage report
```

Let's try to create a commit and see how our action works.

Passing tests in the Actions interface

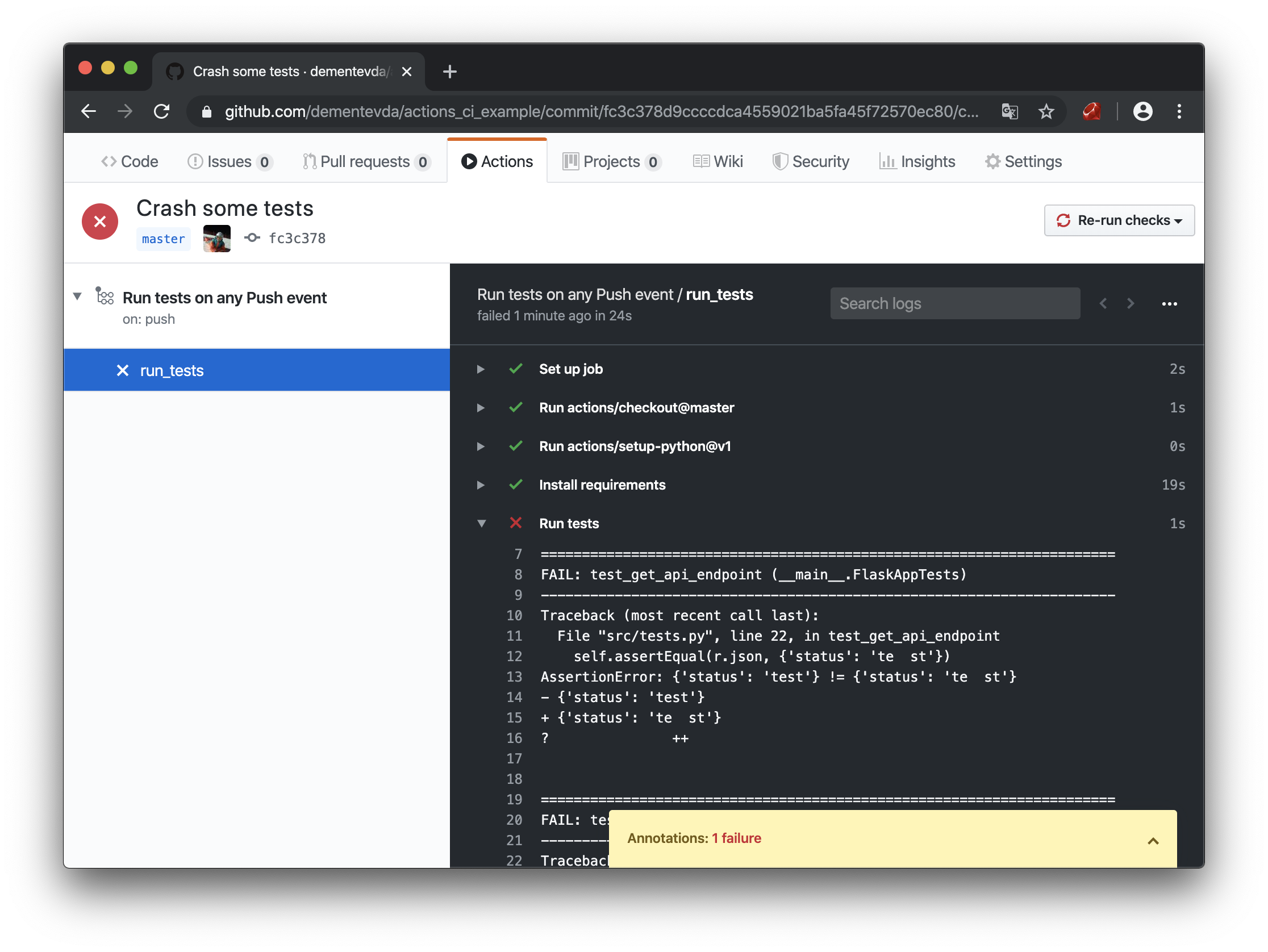

Hooray, we managed to create our first action and run it! Now let's break one of the tests and look at the output:

Failing tests in the Actions interface

The tests failed. The indicator turned red, and I even received an email notification. Exactly what is needed! 3 out of 8 points of the target scheme can be considered done. Now let's deal with building and storing our Docker images.

Note: from this point on, you need a Docker Hub account.

First, we write a simple Dockerfile in which our application will be executed.

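Dockerfile

```dockerfile
# Base image
FROM python:3.8-alpine
# Copy the project into /app
COPY ./ /app
# Install the dependencies
RUN apk update && pip install -r /app/requirements.txt --no-cache-dir
# Install the application (via distutils)
RUN pip install -e /app
# Port the web server listens on
EXPOSE 8080
# Start the web server
CMD web_server
# Alternative when not installing via distutils
#CMD python /app/src/app.py
```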

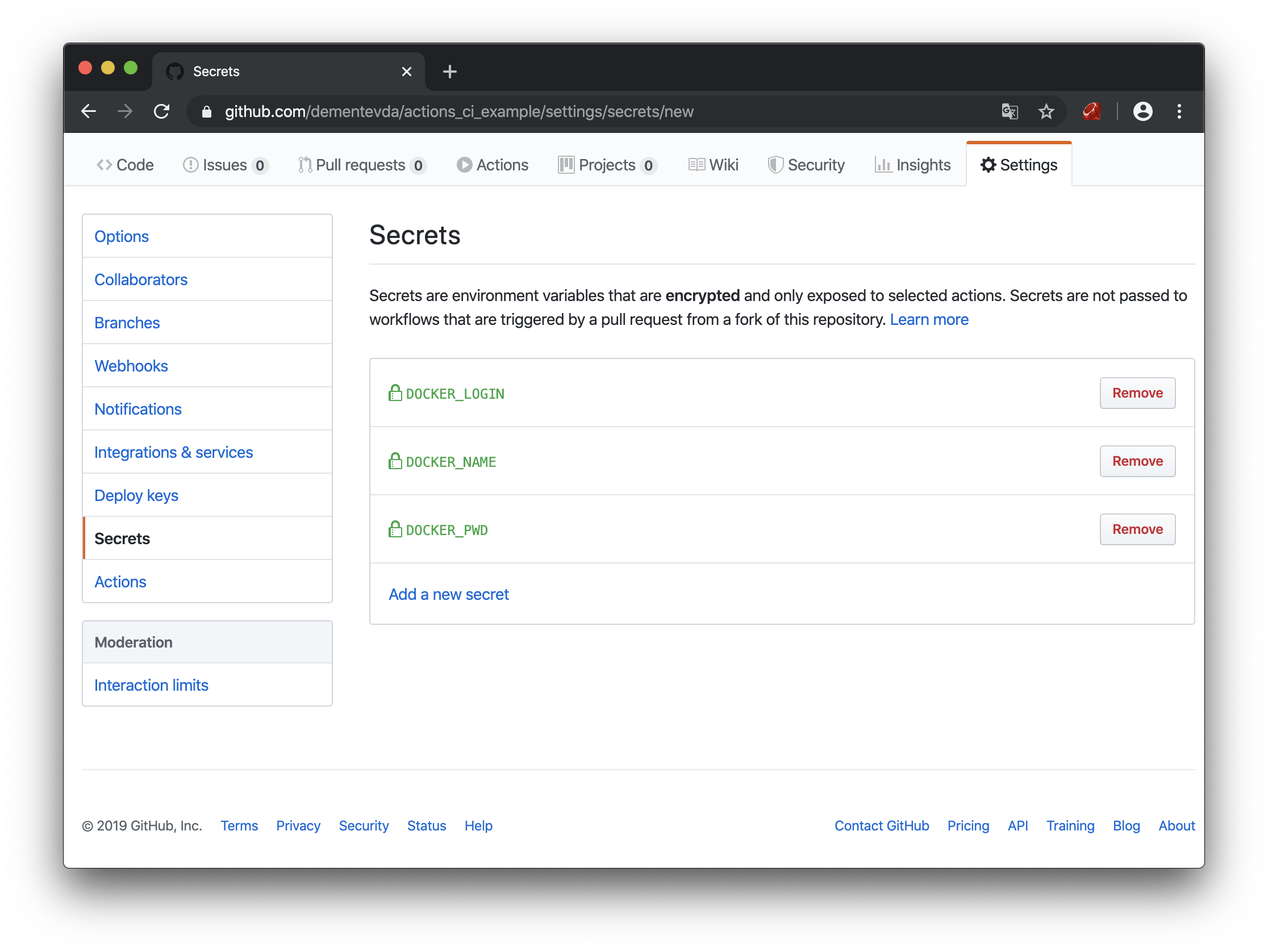

To push an image to Docker Hub, the workflow has to log in to Docker, but since I do not want the whole world to know my account password, I will use the secrets built into GitHub. Strictly speaking, only the password needs to be a secret; everything else could be hardcoded in the *.yaml file and it would work. But I want to copy and paste my actions between projects without changes and pull all project-specific information from secrets.

GitHub Secrets

- DOCKER_LOGIN - login on hub.docker.com

- DOCKER_PWD - password

- DOCKER_NAME - name of the Docker repository for this project (must be created in advance)

Okay, the preparation is done, now let's create our second action:

```shell
$ touch .github/workflows/pub_on_release.yaml
```

We copy the testing job from the previous action, except for the trigger (I did not find a way to import one action into another). We replace the trigger with "run on release":

```yaml
on:
  release:
    types: [published]
```

IMPORTANT! It is very important to get the on.event condition right.

For example, if on.release does not specify types, then creating a release fires at least 2 events: published and created. That means 2 build processes are launched at once.

Now, in the same file, we add another job that depends on the first:

```yaml
  build_and_pub:
    needs: [run_tests]
```

needs - says that this job will not start until run_tests has finished.

IMPORTANT! If you have several action files, each with several jobs, they all run simultaneously in different environments. A separate environment is created for each job, independent of the other jobs. If you do not specify needs, the testing job and the build job are launched simultaneously and independently of each other.
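The effect of `needs` can be pictured as a dependency graph. The following is only an illustrative sketch in Python (3.9+), not a description of how GitHub schedules jobs internally; the `waves` helper is a hypothetical name introduced for this example, while the job names come from the workflow above.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def waves(needs):
    """Group jobs into waves; jobs in the same wave may start in parallel.

    `needs` maps each job name to the list of jobs it waits for,
    mirroring the `needs:` key of a workflow job.
    """
    sorter = TopologicalSorter(needs)
    sorter.prepare()
    result = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose dependencies are all done
        result.append(ready)
        sorter.done(*ready)
    return result


# With `needs`, the build waits for the tests.
print(waves({"run_tests": [], "build_and_pub": ["run_tests"]}))
# [['run_tests'], ['build_and_pub']]

# Without `needs`, both jobs land in the same wave and start together.
print(waves({"run_tests": [], "build_and_pub": []}))
# [['build_and_pub', 'run_tests']]
```

In the second case, a broken test would not stop the image from being built and published, which is exactly what `needs` prevents.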

Add environment variables in which our secrets will be:

```yaml
    env:
      LOGIN: ${{ secrets.DOCKER_LOGIN }}
      NAME: ${{ secrets.DOCKER_NAME }}
```

Now for the steps of our job: we have to log in to Docker, build the image, and publish it to the registry:

```yaml
    steps:
      - name: Login to docker.io
        run: echo ${{ secrets.DOCKER_PWD }} | docker login -u ${{ secrets.DOCKER_LOGIN }} --password-stdin
      - uses: actions/checkout@master
      - name: Build image
        run: docker build -t $LOGIN/$NAME:${GITHUB_REF:11} -f Dockerfile .
      - name: Push image to docker.io
        run: docker push $LOGIN/$NAME:${GITHUB_REF:11}
```

GITHUB_REF is a GitHub environment variable that stores the reference for which the trigger fired (branch name, tag, etc.), for example `refs/tags/v0.0.0`. `${GITHUB_REF:11}` is a shell substring expansion: if our tags have the format "v0.0.0", it cuts off the first 11 characters (`refs/tags/v`), and "0.0.0" remains.
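To make the offset less magical, here is a small Python sketch of the same slicing. The `tag_from_ref` helper is a hypothetical name used only for illustration; the workflow itself relies on the shell expansion, not on Python.

```python
def tag_from_ref(github_ref, skip=11):
    """Mimic the shell expansion ${GITHUB_REF:11}: drop the first `skip` characters."""
    return github_ref[skip:]


print(len("refs/tags/v"))                # 11
print(tag_from_ref("refs/tags/v0.0.0"))  # 0.0.0

# The offset is tied to the "v0.0.0" tag format: a tag without the
# leading "v" loses its first character.
print(tag_from_ref("refs/tags/1.2.3"))   # .2.3
```

So the trick works as long as every release tag starts with exactly one extra character, such as `v`.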

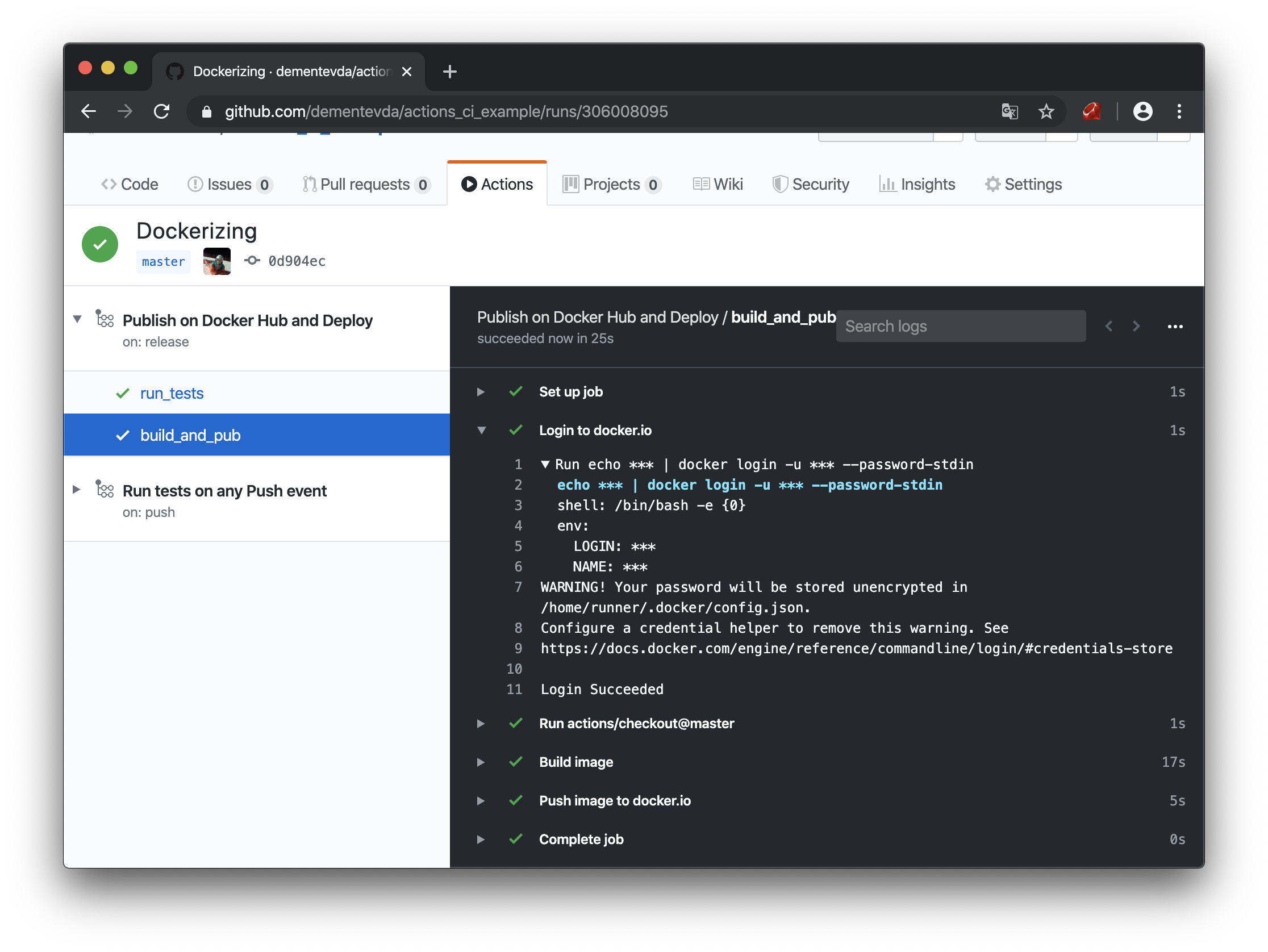

Push the code and create a new tag. We can see that our image was successfully built and sent to the registry, and our password was not exposed anywhere.

Build and ship container in Actions interface

Check the hub:

Container stored in docker hub

Everything works, but the most difficult task remains: deployment. Here you need a VPS with a public IP address, where we will deploy the container and where the hook can be sent. In theory, on the VPS or a home server you could run a cron script that pulls and starts a new image whenever one appears, or rig something up with a Telegram bot. Surely there are tons of ways to do this. But I will work with an external IP. To keep things simple, I wrote a small web service in Flask with a simple API.

In short, there is 1 endpoint “/”.

A GET request returns json with all active containers on the host.

POST - receives data in the format:

```
{
    "owner": " ",
    "repository": " ",
    "tag": "v0.0.1",
    "ports": {"8080": 8080, "443": 443}
}
```

What happens on the host:

- the name of the new image is assembled from the received JSON

- the new image is pulled

- if the image was downloaded successfully, the current container is stopped and removed

- a new container is started, with its ports published (the -p flag)

All work with Docker is done using the docker-py library.
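The steps above could be sketched with docker-py roughly as follows. This is not the author's service, only a minimal illustration under stated assumptions: the helper names are hypothetical, the container is assumed to be named after the repository, and the keys of `ports` are assumed to be container ports with host ports as values.

```python
def image_reference(payload):
    """Build ("owner/repository", "tag") from the webhook JSON."""
    return f"{payload['owner']}/{payload['repository']}", payload["tag"]


def port_bindings(ports):
    """Convert {"8080": 8080} into the {"8080/tcp": 8080} form docker-py accepts."""
    return {f"{container_port}/tcp": int(host_port)
            for container_port, host_port in (ports or {}).items()}


def redeploy(payload):
    """Pull the new image, replace the running container, publish the ports."""
    # docker-py is imported lazily so the two helpers above work without it.
    import docker
    from docker.errors import NotFound

    client = docker.from_env()
    repository, tag = image_reference(payload)
    client.images.pull(repository, tag=tag)  # raises if the image cannot be pulled

    name = payload["repository"]  # assumption: container named after the repository
    try:
        old = client.containers.get(name)
        old.stop()
        old.remove()
    except NotFound:
        pass  # nothing to replace, e.g. on the very first deployment

    return client.containers.run(
        f"{repository}:{tag}",
        name=name,
        ports=port_bindings(payload.get("ports")),
        detach=True,
    )
```

Because the pull happens before the old container is touched, a failed download leaves the currently running version in place.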

It would be very wrong to publish such a service on the Internet without at least minimal protection, so I made a semblance of an API key: the service reads a token from the CI_TOKEN environment variable and compares it with the value of the Authorization header of each request.
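A check of this kind might look like the sketch below. It is an assumption about the general shape, not the code of the actual service: `is_authorized` is a hypothetical helper, and the constant-time comparison through `hmac.compare_digest` is my own addition.

```python
import hmac
import os


def is_authorized(headers, expected_token):
    """Return True only if the Authorization header equals the expected token."""
    if not expected_token:
        return False  # refuse every request when no token is configured
    supplied = headers.get("Authorization", "")
    # compare_digest takes the same time wherever the first mismatch occurs
    return hmac.compare_digest(supplied.encode(), expected_token.encode())


# The service would read the token once at startup.
token = os.environ.get("CI_TOKEN", "")

print(is_authorized({"Authorization": "abc"}, "abc"))    # True
print(is_authorized({"Authorization": "wrong"}, "abc"))  # False
print(is_authorized({}, "abc"))                          # False
```

In a Flask view, `request.headers` can be passed as `headers`, since it supports `.get()` in the same way as a dictionary.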

Web server listing for deployment

For this application, I also wrote a setup.py to install it on the system. You can install it with:

```shell
$ python3 setup.py install
```

provided that you have downloaded the files and are in the directory of this application.

After installation, you need to enable the application as a service so that it starts by itself after a server reboot. For this we use systemd.

Here is an example configuration file:

```ini
[Unit]
Description=Deployment web server
After=network-online.target

[Service]
Type=simple
RestartSec=3
ExecStart=/usr/local/bin/ci_example
Environment=CI_TOKEN=#<I generate it with $(openssl rand -hex 20)>

[Install]
WantedBy=multi-user.target
```

It remains only to run it:

```shell
$ sudo systemctl daemon-reload
$ sudo systemctl enable ci_example.service
$ sudo systemctl start ci_example.service
```

You can check the status and the most recent log lines of the deployment web server with:

```shell
$ sudo systemctl status ci_example.service
```

The server part is ready; all that remains is to add a hook to our action. To do this, add two more secrets: the address of our server (DEPLOYMENT_SERVER) and the CI_TOKEN we generated when installing the application (DEPLOYMENT_TOKEN).

At first I wanted to use a ready-made curl action from the GitHub Marketplace, but unfortunately it strips the quotes from the body of the POST request, which makes the JSON impossible to parse. That obviously did not suit me, so I decided to use the curl built into Ubuntu (on which I build the containers). Incidentally, this also had a positive effect on performance, because no additional container has to be built:

```yaml
  deploy:
    needs: [build_and_pub]
    runs-on: [ubuntu-latest]
    steps:
      - name: Set tag to env
        # Save the trimmed tag for later steps
        # ($GITHUB_ENV replaces the disabled ::set-env command)
        run: echo "TAG=${GITHUB_REF:11}" >> $GITHUB_ENV
      - name: Send webhook for deploy
        run: "curl --silent --show-error --fail -X POST ${{ secrets.DEPLOYMENT_SERVER }} -H 'Authorization: ${{ secrets.DEPLOYMENT_TOKEN }}' -H 'Content-Type: application/json' -d '{\"owner\": \"${{ secrets.DOCKER_LOGIN }}\", \"repository\": \"${{ secrets.DOCKER_NAME }}\", \"tag\": \"${{ env.TAG }}\", \"ports\": {\"8080\": 8080}}'"
```

Note: it is very important to specify the --fail switch. Without it, the step is considered successful for any request, even if the server responded with an error.
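When debugging the hook from a local machine, the same request can be reproduced in Python. The sketch below uses only the standard library; the function names and the `deploy.example.com` address are placeholders, not part of the project. Unlike curl without `--fail`, `urllib.request.urlopen` raises `urllib.error.HTTPError` for 4xx and 5xx responses, so errors cannot go unnoticed.

```python
import json
import urllib.request


def build_deploy_request(url, token, owner, repository, tag, ports):
    """Build the POST request that the workflow sends with curl."""
    body = json.dumps({
        "owner": owner,
        "repository": repository,
        "tag": tag,
        "ports": ports,
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": token, "Content-Type": "application/json"},
    )


def send(request):
    """Send the request; urlopen raises HTTPError on a 4xx/5xx response."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


# Placeholder values; nothing is sent until send(request) is called.
request = build_deploy_request(
    "http://deploy.example.com/", "secret-token",
    "den", "app", "0.0.1", {"8080": 8080},
)
print(request.get_method())  # POST
```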

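It is also worth noting that the `${{ ... }}` placeholders used in the request are not shell variables but special expressions that GitHub substitutes before the command runs. GITHUB_REF is the exception: it is an ordinary environment variable, and the substring trick works on it only inside the shell. That is why for a long time I could not understand why the request was not working correctly. Once I computed the tag in a separate shell step and saved it as an environment variable, which can then be referenced through `${{ env... }}`, everything worked.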

Listing: the build and deploy action

```yaml
name: Publish on Docker Hub and Deploy

on:
  release:
    types: [published]
    # Run only when a release is published
jobs:
  run_tests:
    # Run the tests first
    runs-on: [ubuntu-latest]
    steps:
      # Check out the repository
      - uses: actions/checkout@master
      # Install Python
      - uses: actions/setup-python@v1
        with:
          python-version: '3.8'
          architecture: 'x64'
      - name: Install requirements
        # Install the dependencies
        run: pip install -r requirements.txt
      - name: Run tests
        # Run the tests under coverage
        run: coverage run src/tests.py
      - name: Tests report
        run: coverage report

  build_and_pub:
    # Start only after the tests have passed
    needs: [run_tests]
    runs-on: [ubuntu-latest]
    env:
      LOGIN: ${{ secrets.DOCKER_LOGIN }}
      NAME: ${{ secrets.DOCKER_NAME }}
    steps:
      - name: Login to docker.io
        # Log in to docker.io
        run: echo ${{ secrets.DOCKER_PWD }} | docker login -u ${{ secrets.DOCKER_LOGIN }} --password-stdin
      # Check out the repository
      - uses: actions/checkout@master
      - name: Build image
        # Build the image with a hub.docker-style name: login/repository:version
        run: docker build -t $LOGIN/$NAME:${GITHUB_REF:11} -f Dockerfile .
      - name: Push image to docker.io
        # Publish the image to the registry
        run: docker push $LOGIN/$NAME:${GITHUB_REF:11}

  deploy:
    # Once the image is in the registry,
    # notify the deployment server with curl
    needs: [build_and_pub]
    runs-on: [ubuntu-latest]
    steps:
      - name: Set tag to env
        # $GITHUB_ENV replaces the disabled ::set-env command
        run: echo "RELEASE_VERSION=${GITHUB_REF:11}" >> $GITHUB_ENV
      - name: Send webhook for deploy
        run: "curl --silent --show-error --fail -X POST ${{ secrets.DEPLOYMENT_SERVER }} -H 'Authorization: ${{ secrets.DEPLOYMENT_TOKEN }}' -H 'Content-Type: application/json' -d '{\"owner\": \"${{ secrets.DOCKER_LOGIN }}\", \"repository\": \"${{ secrets.DOCKER_NAME }}\", \"tag\": \"${{ env.RELEASE_VERSION }}\", \"ports\": {\"8080\": 8080}}'"
```

Okay, we have put it all together. Now let's create a new release and look at the actions.

Webhook to the deployment application in the Actions interface

Everything worked. Let's make a GET request to the deployment service (it lists all active containers on the host):

Container deployed on the VPS

Now we will send requests to our deployed application:

GET request to deployed container

POST request to deployed container

Conclusions

GitHub Actions is a very convenient and flexible tool with which you can do many things that can greatly simplify your life. It all depends on the imagination.

It also supports integration testing with services.

As a logical continuation of this project, you could add to the webhook the ability to pass custom parameters for launching the container.

In the future, I will try to use this project as a basis for deploying Helm charts, once I study and experiment with k8s.

If you have some kind of home project, then GitHub Actions can greatly simplify working with the repository.

Syntax details can be found here.

All project sources