We

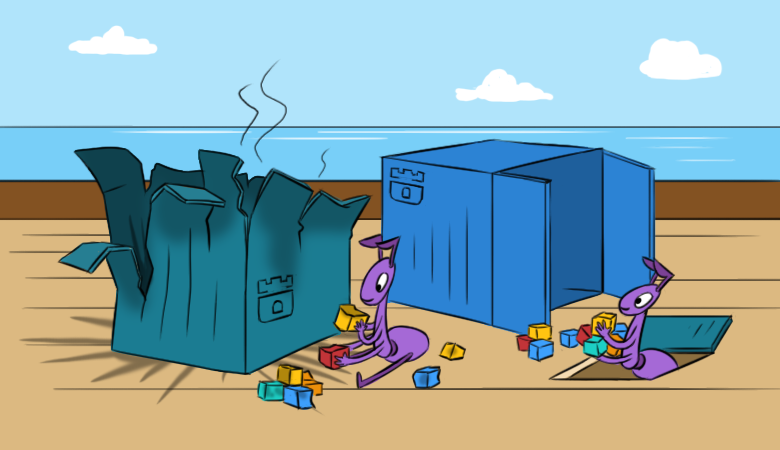

already talked about how / why we like Rook: to a significant extent, it simplifies working with storage in Kubernetes clusters. However, with this simplicity, certain difficulties come. We hope that the new material will help to better understand such difficulties before they even manifest themselves.

To make it a more interesting read, we start with the consequences of a hypothetical problem in the cluster.

"Everything is lost!"

Imagine that you once configured and launched Rook in your K8s cluster and were happy with how it worked, but at some “wonderful” moment the following happens:

- New pods cannot mount RBD images from Ceph.

- Commands like lsblk and df do not work on Kubernetes hosts. This automatically means that “something is wrong” with the RBD images mounted on the nodes. They cannot be read, which indicates that the monitors are unavailable...

- Indeed, there are no working monitors in the cluster. Moreover, there are no OSD pods and no MGR pods either.

When was the rook-ceph-operator pod started? Much more recently than it was deployed. Why? The Rook operator decided to create a new cluster... How can we now restore the cluster and the data in it?

To begin with, let's take the longer, more interesting way: a thoughtful investigation of Rook's internals and a step-by-step restoration of its components.

Of course, there is also a shorter, correct way: using backups. As you know, admins are divided into two types: those who don’t make backups, and those who already do... But more on that after the investigation.

A little practice, or a long way

Take a look and restore the monitors

So, let's look at the list of ConfigMaps: it contains rook-ceph-config and rook-config-override, which are needed for a backup. They appear upon successful deployment of the cluster.

NB: In newer versions, after this PR was accepted, ConfigMaps are no longer an indicator of a successful cluster deployment.

To proceed, we need a hard reboot of all servers that have mounted RBD images (ls /dev/rbd*). It must be done via sysrq (or “on foot” in the data center). The reason is that the mounted RBDs have to be detached, and a regular reboot will not do that (it will try, unsuccessfully, to unmount them gracefully).

As the saying goes, the theater begins with the cloakroom, and a Ceph cluster begins with its monitors. Let's look at them.

Rook mounts the following entities in the monitor pod:

    Volumes:
      rook-ceph-config:
        Type:        ConfigMap (a volume populated by a ConfigMap)
        Name:        rook-ceph-config
      rook-ceph-mons-keyring:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  rook-ceph-mons-keyring
      rook-ceph-log:
        Type:        HostPath (bare host directory volume)
        Path:        /var/lib/rook/kube-rook/log
      ceph-daemon-data:
        Type:        HostPath (bare host directory volume)
        Path:        /var/lib/rook/mon-a/data
    Mounts:
      /etc/ceph from rook-ceph-config (ro)
      /etc/ceph/keyring-store/ from rook-ceph-mons-keyring (ro)
      /var/lib/ceph/mon/ceph-a from ceph-daemon-data (rw)
      /var/log/ceph from rook-ceph-log (rw)

Let's see what is in the rook-ceph-mons-keyring secret:

    kind: Secret
    data:
      keyring: LongBase64EncodedString=

We decode it and get an ordinary keyring with permissions for the admin and the monitors:

    [mon.]
        key = AQAhT19dlUz0LhBBINv5M5G4YyBswyU43RsLxA==
        caps mon = "allow *"
    [client.admin]
        key = AQAhT19d9MMEMRGG+wxIwDqWO1aZiZGcGlSMKp==
        caps mds = "allow *"
        caps mon = "allow *"
        caps osd = "allow *"
        caps mgr = "allow *"
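The decoding step can also be reproduced outside the cluster. Below is a minimal Python sketch, assuming the base64 value has already been copied out of the Secret's data.keyring field. The function names are illustrative, and the parser only covers the simple "section, then option = value" layout shown above.

```python
import base64


def decode_secret_value(encoded: str) -> str:
    """Decode one base64 value taken from a Secret's `data` map."""
    return base64.b64decode(encoded).decode("utf-8")


def parse_keyring(text: str) -> dict:
    """Parse a Ceph keyring into {entity: {option: value}}."""
    entities = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            current = entities.setdefault(line[1:-1], {})
        elif "=" in line and current is not None:
            # Split on the first "=" only: base64 keys end with "=" padding.
            option, value = line.split("=", 1)
            current[option.strip()] = value.strip().strip('"')
    return entities


if __name__ == "__main__":
    # Stand-in for the real Secret value, built here so the example runs offline.
    sample = (
        "[mon.]\n"
        "\tkey = AQAhT19dlUz0LhBBINv5M5G4YyBswyU43RsLxA==\n"
        '\tcaps mon = "allow *"\n'
    )
    encoded = base64.b64encode(sample.encode("utf-8")).decode("ascii")
    print(parse_keyring(decode_secret_value(encoded)))
```

Having the keyring as a dictionary makes it easy to compare the same entity across several secrets, which is what the next steps do by eye.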

Let's remember it. Now look at the keyring in the rook-ceph-admin-keyring secret:

    kind: Secret
    data:
      keyring: anotherBase64EncodedString=

What is in it?

    [client.admin]
        key = AQAhT19d9MMEMRGG+wxIwDqWO1aZiZGcGlSMKp==
        caps mds = "allow *"
        caps mon = "allow *"
        caps osd = "allow *"
        caps mgr = "allow *"

The same. Let's look further... Here, for example, is the rook-ceph-mgr-a-keyring secret:

    [mgr.a]
        key = AQBZR19dbVeaIhBBXFYyxGyusGf8x1bNQunuew==
        caps mon = "allow *"
        caps mds = "allow *"
        caps osd = "allow *"

In the end, we find a few more secret values in the rook-ceph-mon object:

    kind: Secret
    data:
      admin-secret: AQAhT19d9MMEMRGG+wxIwDqWO1aZiZGcGlSMKp==
      cluster-name: a3ViZS1yb29r
      fsid: ZmZiYjliZDMtODRkOS00ZDk1LTczNTItYWY4MzZhOGJkNDJhCg==
      mon-secret: AQAhT19dlUz0LhBBINv5M5G4YyBswyU43RsLxA==

This is the original set of keys, from which all the secrets described above are derived.

As you know (see dataDirHostPath in the documentation), Rook stores this data in two places. So let's go to the nodes and look at the keyrings in the directories that are mounted into the monitor and OSD pods. To do that, we find /var/lib/rook/mon-a/data/keyring on the nodes and see:

    # cat /var/lib/rook/mon-a/data/keyring
    [mon.]
        key = AXAbS19d8NNUXOBB+XyYwXqXI1asIzGcGlzMGg==
        caps mon = "allow *"

Unexpectedly, the key turns out to be different from the one stored in rook-ceph-mon.

What about the admin keyring? It is there as well:

    # cat /var/lib/rook/kube-rook/client.admin.keyring
    [client.admin]
        key = AXAbR19d8GGSMUBN+FyYwEqGI1aZizGcJlHMLgx=
        caps mds = "allow *"
        caps mon = "allow *"
        caps osd = "allow *"
        caps mgr = "allow *"

Here is the problem. A failure occurred and the cluster was recreated... although in reality it was not: the old data is still on the nodes.
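The comparison we just did by eye can be sketched in Python. This is only an illustration: the function names are made up, and the regular expression assumes that the key line comes directly after each section header, as it does in the keyrings shown above.

```python
import re

# "[entity]" immediately followed by its "key = ..." line.
_ENTITY_KEY = re.compile(r"\[([^\]]+)\]\s*key\s*=\s*(\S+)")


def keys_by_entity(keyring_text: str) -> dict:
    """Map each entity name (e.g. "mon.") to its key."""
    return dict(_ENTITY_KEY.findall(keyring_text))


def mismatched_entities(secret_keyring: str, disk_keyring: str) -> list:
    """Return entities present in both keyrings whose keys differ."""
    secret = keys_by_entity(secret_keyring)
    disk = keys_by_entity(disk_keyring)
    shared = secret.keys() & disk.keys()
    return sorted(name for name in shared if secret[name] != disk[name])


if __name__ == "__main__":
    from_secret = (
        "[mon.]\n"
        "    key = AQAhT19dlUz0LhBBINv5M5G4YyBswyU43RsLxA==\n"
        '    caps mon = "allow *"\n'
    )
    from_disk = (
        "[mon.]\n"
        "    key = AXAbS19d8NNUXOBB+XyYwXqXI1asIzGcGlzMGg==\n"
        '    caps mon = "allow *"\n'
    )
    print(mismatched_entities(from_secret, from_disk))
```

Any entity reported here has a key in Kubernetes that no longer matches what the old cluster's daemons on disk expect.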

It becomes clear that the secrets hold newly generated keyrings, and they are not from our old cluster. Therefore, we:

- take the monitor keyring from the file /var/lib/rook/mon-a/data/keyring (or from a backup);

- replace the keyring in the rook-ceph-mons-keyring secret;

- write the admin and monitor keys into rook-ceph-mon;

- delete the controllers of the monitor pods.
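Replacing a keyring in a Secret means base64-encoding it again. The sketch below, under stated assumptions, builds a JSON merge patch and the matching kubectl command line without executing anything. The kube-rook namespace is only a guess based on the host paths above, and the helper names are hypothetical.

```python
import base64
import json
import shlex


def keyring_patch(keyring_text: str) -> str:
    """Build a JSON merge patch that replaces the `keyring` entry of a Secret."""
    encoded = base64.b64encode(keyring_text.encode("utf-8")).decode("ascii")
    return json.dumps({"data": {"keyring": encoded}})


def patch_command(namespace: str, secret_name: str, patch: str) -> list:
    """Return the kubectl argv for the patch; nothing is executed here."""
    return ["kubectl", "-n", namespace, "patch", "secret", secret_name,
            "--type", "merge", "-p", patch]


if __name__ == "__main__":
    # The keyring text recovered from the node (or from a backup).
    old_keyring = (
        "[mon.]\n"
        "\tkey = AXAbS19d8NNUXOBB+XyYwXqXI1asIzGcGlzMGg==\n"
        '\tcaps mon = "allow *"\n'
    )
    # "kube-rook" is an assumed namespace; substitute your own.
    cmd = patch_command("kube-rook", "rook-ceph-mons-keyring",
                        keyring_patch(old_keyring))
    print(shlex.join(cmd))
```

Printing the command instead of running it leaves room to review the patch before touching the cluster.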

The miracle does not take long: the monitors appear and start up. Hooray, that's a start!

Restore OSD

We go into the rook-operator pod: ceph mon dump shows that all monitors are in place, and ceph -s shows that they are in quorum. However, if you look at the OSD tree (ceph osd tree), you will see something strange: OSDs have started to appear, but they are empty. It turns out that they also need to be restored somehow. But how?

Meanwhile, the much-needed rook-ceph-config and rook-config-override have appeared among the ConfigMaps, along with many others named rook-ceph-osd-$nodename-config. Let's look at them:

    kind: ConfigMap
    data:
      osd-dirs: '{"/mnt/osd1":16,"/mnt/osd2":18}'

Everything is wrong, everything is mixed up!

We scale the operator to zero, delete the generated Deployments of the OSD pods, and fix these ConfigMaps. But where do we get the correct mapping of OSDs to nodes?

- Let's dig into the /mnt/osd[1-2] directories on the nodes again, hoping to find a clue there.

- There are two subdirectories in /mnt/osd1: osd0 and osd16. Is the latter the very ID specified in the ConfigMap (16)?

- We check the sizes and see that osd0 is much larger than osd16.

We conclude that osd0 is the OSD we need, the one that should be specified for /mnt/osd1 in the ConfigMap (we use directory-based OSDs).
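The size heuristic above can be sketched in Python for a single node. This is an assumption-laden illustration, not part of Rook: the function names are invented, and "the largest osdN subdirectory is the old OSD" is exactly the guess made in the text, so its result should be reviewed by hand before editing any ConfigMap.

```python
import json
import os
import re


def dir_size(path: str) -> int:
    """Total size in bytes of the regular files under path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if not os.path.islink(file_path):
                total += os.path.getsize(file_path)
    return total


def guess_osd_id(data_dir: str):
    """Return the ID of the largest osdN subdirectory, or None if there is none."""
    sizes = {}
    for entry in os.listdir(data_dir):
        match = re.fullmatch(r"osd(\d+)", entry)
        full_path = os.path.join(data_dir, entry)
        if match and os.path.isdir(full_path):
            sizes[int(match.group(1))] = dir_size(full_path)
    if not sizes:
        return None
    return max(sizes, key=sizes.get)


def corrected_osd_dirs(data_dirs) -> str:
    """Build a candidate `osd-dirs` value in the same JSON form as the ConfigMap."""
    mapping = {}
    for data_dir in data_dirs:
        osd_id = guess_osd_id(data_dir)
        if osd_id is not None:
            mapping[data_dir] = osd_id
    return json.dumps(mapping, separators=(",", ":"))


if __name__ == "__main__":
    existing = [d for d in ("/mnt/osd1", "/mnt/osd2") if os.path.isdir(d)]
    print(corrected_osd_dirs(existing))
```

Run on a node, this prints a candidate value for osd-dirs that can be compared with what the operator generated.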

Step by step, we check all the nodes and edit the ConfigMaps. After that, we can start the Rook operator pod and read its logs. Everything in them looks wonderful:

- I am a cluster operator;

- I found drives on nodes;

- I found monitors;

- monitors became friends, i.e. formed a quorum;

- running OSD deployments ...

Let's go back to the Rook operator pod and check whether the cluster is alive... and yes, we were slightly wrong in our conclusions about the OSD names on some nodes! No matter: we correct the ConfigMaps again, delete the extra directories left by the new OSDs, and arrive at the long-awaited HEALTH_OK state!

Check the images in the pool:

Everything is in place - the cluster is saved!

I am lazy and make backups, or the Fast Way

If backups were done for Rook, then the recovery procedure becomes much simpler and boils down to the following:

- Scale the Rook operator deployment to zero;

- Delete all deployments except the Rook operator;

- Restore all secrets and ConfigMaps from the backup;

- Restore the contents of /var/lib/rook/mon-* on the nodes;

- Restore the CephCluster, CephFilesystem, CephBlockPool, CephNFS, and CephObjectStore CRDs (if they were suddenly lost);

- Scale the Rook operator deployment back to 1.
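The order of the steps above matters, so it can help to write the plan down before running anything. The Python sketch below only builds the kubectl command lines and prints them. The namespaces, the deployment list, and the backup file names are all hypothetical, and restoring /var/lib/rook/mon-* on the nodes happens outside kubectl, so it appears only as a comment.

```python
import shlex


def restore_plan(cluster_ns, operator_ns, other_deployments, backup_dir,
                 operator="rook-ceph-operator"):
    """Return the kubectl commands for a backup-based restore, in order."""
    def kubectl(namespace, *args):
        return ["kubectl", "-n", namespace, *args]

    # 1. Stop the operator so it does not interfere.
    plan = [kubectl(operator_ns, "scale", "deployment", operator, "--replicas=0")]
    # 2. Remove every other deployment (names supplied by the caller).
    for name in other_deployments:
        plan.append(kubectl(cluster_ns, "delete", "deployment", name))
    # 3. Restore secrets and ConfigMaps, then the custom resources.
    #    (Restore /var/lib/rook/mon-* on the nodes before the last step.)
    #    The file names below are hypothetical.
    for manifest in ("secrets.yaml", "configmaps.yaml", "custom-resources.yaml"):
        plan.append(kubectl(cluster_ns, "apply", "-f", f"{backup_dir}/{manifest}"))
    # 4. Start the operator again.
    plan.append(kubectl(operator_ns, "scale", "deployment", operator, "--replicas=1"))
    return plan


if __name__ == "__main__":
    # Example values only; substitute the names used in your cluster.
    for command in restore_plan("kube-rook", "rook-ceph-system",
                                ["rook-ceph-mon-a", "rook-ceph-mgr-a"],
                                "/backup/rook"):
        print(shlex.join(command))
```

Keeping the plan as data makes it easy to review it, or to paste the printed commands into a runbook.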

Useful Tips

Make backups!

And in order to avoid situations when you need to recover from them:

- Before large-scale cluster maintenance that involves server reboots, scale the Rook operator to zero so that it does not do anything unnecessary.

- Add nodeAffinity for the monitors in advance.

- Take care to configure the ROOK_MON_HEALTHCHECK_INTERVAL and ROOK_MON_OUT_TIMEOUT timeouts in advance.

Instead of a conclusion

There is no point in arguing with the fact that Rook, being an additional “layer” (in the overall scheme of organizing storage in Kubernetes), simplifies a lot but also adds new complexity and potential problems to the infrastructure. Only a “small” matter remains: to make a balanced, informed choice between these risks on the one hand and the benefits the solution brings in your particular case on the other.

By the way, the section “Adopt an existing Rook Ceph cluster into a new Kubernetes cluster” has recently been added to the Rook documentation. It describes in more detail what needs to be done to move existing data to a new Kubernetes cluster or to restore a cluster that has collapsed for one reason or another.
